Blog Hiatus

24 January, 2017

There will be no more blog posts here for the foreseeable future. I have recently suffered a stroke, and my right arm and hand are useless.

A small corner of the world reflected in a small corner of my mind

12 January, 2016

I now have a retrospective department on this blog, where I will re-publish those of my English-language articles that are currently on my web site. First up:

The Objectivist Validation of Individual Rights

Appendix: An Answer to Walter Block

Is Fractional Reserve Banking Compatible with Objectivism?

Joseph A. Schumpeter – Friend or Foe of Capitalism?

Objectivism versus “Austrian” Economics on Value

Reisman Insights Without George Reisman

Objectivism and “Austrian” Economics – compatible or not?

Quotes from Jean-Baptiste Say

I have already published My Life as a Translator.

Look out for more.

22 November, 2016

Recently, somebody on Facebook linked to George Reisman’s essay Education and the Racist Road to Barbarism[1] and quoted a couple of lengthy passages:

In order to understand the implications, it is first necessary to remind oneself what Western civilization is. From a historical perspective, Western civilization embraces two main periods: the era of Greco-Roman civilization and the era of modern Western civilization, which latter encompasses the rediscovery of Greco-Roman civilization in the late Middle Ages, and the periods of the Renaissance, the Enlightenment, and the Industrial Revolution. Modern Western civilization continues down to the present moment, of course, as the dominant force in the culture of the countries of Western Europe and the United States and the other countries settled by the descendants of West Europeans. It is an increasingly powerful force in the rapidly progressing countries of the Far East, such as Japan, Taiwan, and South Korea, whose economies rest on “Western” foundations in every essential respect.

From the perspective of intellectual and cultural content, Western civilization represents an understanding and acceptance of the following: the laws of logic; the concept of causality and, consequently, of a universe ruled by natural laws intelligible to man; on these foundations, the whole known corpus of the laws of mathematics and science; the individual’s self-responsibility based on his free will to choose between good and evil; the value of man above all other species on the basis of his unique possession of the power of reason; the value and competence of the individual human being and his corollary possession of individual rights, among them the right to life, liberty, property, and the pursuit of happiness; the need for limited government and for the individual’s freedom from the state; on this entire preceding foundation, the validity of capitalism, with its unprecedented and continuing economic development in terms of division of labor, technological progress, capital accumulation, and rising living standards; in addition, the importance of visual arts and literature depicting man as capable of facing the world with confidence in his power to succeed, and music featuring harmony and melody.

And:

For the case of a Westernized individual, I must think of myself. I am not of West European descent. All four of my grandparents came to the United States from Russia, about a century ago. Modern Western civilization did not originate in Russia and hardly touched it. The only connection my more remote ancestors had with the civilization of Greece and Rome was probably to help in looting and plundering it. Nevertheless, I am thoroughly a Westerner. I am a Westerner because of the ideas and values I hold. I have thoroughly internalized all of the leading features of Western civilization. They are now my ideas and my values. Holding these ideas and values as I do, I would be a Westerner wherever I lived and whenever I was born. I identify with Greece and Rome, and not with my ancestors of that time, because I share the ideas and values of Greece and Rome, not those of my ancestors. To put it bluntly, my ancestors were savages–certainly up to about a thousand years ago, and, for all practical purposes, probably as recently as four or five generations ago. . . .

There is no need for me to dwell any further on my own savage ancestors. The plain truth is that everyone’s ancestors were savages–indeed, at least 99.5 percent of everyone’s ancestors were savages, even in the case of descendants of the founders of the world’s oldest civilizations. For mankind has existed on earth for a million years, yet the very oldest of civilizations–as judged by the criterion of having possessed a written language–did not appear until less than 5,000 years ago. The ancestors of those who today live in Britain or France or most of Spain were savages as recently as the time of Julius Caesar, slightly more than 2,000 years ago. Thus, on the scale of mankind’s total presence on earth, today’s Englishmen, Frenchmen, and Spaniards earn an ancestral savagery rating of 99.8 percent. The ancestors of today’s Germans and Scandinavians were savages even more recently and thus today’s Germans and Scandinavians probably deserve an ancestral savagery rating of at least 99.9 percent.

It is important to stress these facts to be aware how little significance is to be attached to the members of any race or linguistic group achieving civilization sooner rather than later. Between the descendants of the world’s oldest civilizations and those who might first aspire to civilization at the present moment, there is a difference of at most one-half of one percent on the time scale of man’s existence on earth.

These observations should confirm the fact that there is no reason for believing that civilization is in any way a property of any particular race or ethnic group. It is strictly an intellectual matter–ultimately, a matter of the presence or absence of certain fundamental ideas underlying the acquisition of further knowledge.

One commenter wrote:

Reisman oddly omits the influence of biblical religion on the development of Western civilization, until recently known as Christendom, which in fact is the source of most of the values he claims to cherish.

What values?

And this is not the first instance of extolling sacrifice in the Judeo-Christian religions. Remember the story in Genesis of how God commanded Abraham to sacrifice his only son, which Abraham was quite prepared to do. God did this to test Abraham’s faith in him, and once Abraham had passed the test, Isaac was replaced by a ram.[2]

Or take the Book of Job, where the Lord kills all of Job’s family in order to test Job’s faith in him. After a long and tedious discussion between the Lord and Job, Job finally concedes that this act was totally just, and the Lord then gives him a brand-new family. A consolation for Job, but not much of a consolation for the family that was killed. In this book of the Bible, God acts exactly like a mafia boss.

Back from pre-historic times to the present. The latest canonized saint, Mother Teresa, saw suffering as a great value – so great that she did not bother to offer dying patients any kind of palliative care, but let them suffer great pain, arguing that the pain meant that “Jesus was kissing them”[3]. To any decent person, this is an example of pure and unadulterated sadism; but by the Catholic Church – and by many others as well – Mother Teresa is hailed as a paragon of goodness.

What does it mean to say that man is born in sin and cannot escape his own sinfulness? Well, to be born is a sin; one of the first things a newborn child does is learn how to crawl, and then walk, and then run and jump. This must be sinful. And then a child learns to talk – at first in single words, then in two-word sentences, and eventually he masters his first language and moves on to learn one or more foreign languages. But all of this has to be sinful. A few children very early learn to play musical instruments, and some, like Mozart, start composing symphonies at a very early age. No matter how well such a child plays, and no matter how beautiful the symphonies, this is sinful, thus evil. But this is an idea that nobody could seriously maintain. Walking, talking, composing symphonies are all good things![5]

All this changes when you accept Jesus as your savior. But it remains unclear in what way it changes. It should be noted, in this connection, that Jesus died for our sins some 2,000 years ago. It is hard to give an exact measurement – but has there been less sinfulness and less evil in the world since that time?

And who stands to gain and who stands to lose if we accept original sin? The sinner – the actual evil-doer – stands to gain, for whatever sin he commits, he can always claim that he is no worse than anyone else – and also that he just couldn’t help it, since he was born in sin.

The prophet Isaiah tells us:

If your sins prove to be like crimson, they will become white as snow; if they prove to be as red as crimson dye, they shall become as wool. (Isaiah 1:18.)

But what happens if our actions are already white as snow?

And of course John Galt – an upholder of Western values – refused to be born with original sin.

If we are born in sin and can only do sinful things, then of course it is an even greater sin to take pride in what we do, and Christianity thus teaches us to “eat humble pie”. But it may be illuminating to compare this with one of the Founding Fathers – perhaps the Founding Father – of Western values: Aristotle. In The Nicomachean Ethics, he says that pride is “the crown of the virtues”. It is worth quoting him:

Now the proud man, since he deserves most, must be good in the highest degree; for the better man always deserves more, and the best man most. Therefore the truly proud man must be good. And greatness in every virtue would seem to be characteristic of a proud man. […] If we consider him point by point we shall see the utter absurdity of a proud man who is not good. Nor, again, would he be worthy of honour if he were bad; for honour is the prize of virtue, and it is to the good that it is rendered. Pride, then, seems to be a sort of crown of the virtues; for it makes them greater, and it is not found without them. Therefore it is hard to be truly proud; for it is impossible without nobility and goodness of character. (The Nicomachean Ethics, book 4, chapter 3; translated by David Ross.)

And Ayn Rand, in Galt’s speech, calls pride (which she identifies as “moral ambitiousness”) “the sum of all values”.

When Christianity denigrates pride and elevates humility as a virtue, it merely tells us to blindly accept its doctrine of man’s depravity and of original sin.

But I tell you, love your enemies and pray for those who persecute you, that you may be children of your Father in heaven. He causes his sun to rise on the evil and the good, and sends rain on the righteous and the unrighteous. (Matthew 5:44–45.)

It is a metaphysical fact that the sun and the rain do not distinguish between good people and evil people. But the Gospel attributes this to God and tells us to be like God and make no such distinction. And this shows that the Christian God is neither moral nor immoral: he is amoral.

If anyone comes to me and does not hate father and mother, wife and children, brothers and sisters—yes, even their own life—such a person cannot be my disciple. (Luke 14:26.)

Do not store up for yourselves treasures on earth, where moths and vermin destroy, and where thieves break in and steal. But store up for yourselves treasures in heaven, where moths and vermin do not destroy, and where thieves do not break in and steal. […] You cannot serve both God and money. (Matthew 6:19–20, 24.)

And what is the Christian view on time preference? Another quote from the same chapter:

Therefore I tell you, do not worry about your life, what you will eat or drink; or about your body, what you will wear. Is not life more than food, and the body more than clothes? Look at the birds of the air; they do not sow or reap or store away in barns, and yet your heavenly Father feeds them. Are you not much more valuable than they? Can any one of you by worrying add a single hour to your life?

And why do you worry about clothes? See how the flowers of the field grow. They do not labor or spin. Yet I tell you that not even Solomon in all his splendor was dressed like one of these. If that is how God clothes the grass of the field, which is here today and tomorrow is thrown into the fire, will he not much more clothe you—you of little faith? (Matthew 6:25–30.)

Saving and capital accumulation are definitely not recommended by the Gospel!

Some people will now object that the “Protestant work ethic” is the basis of capitalism.[6] But what is the reasoning here? Jean Calvin taught that every man is predestined both to success or failure in this life and to eternal salvation or condemnation. So the followers of Calvin worked hard just to show themselves and others that they were predestined for success and salvation rather than failure and condemnation. All I can say about this reasoning is that it is odd.

Most Christians, I believe, at least implicitly uphold free will, since it would be senseless to reward the good with eternal salvation and punish the evil with eternal condemnation if man simply cannot help what he does. But Jean Calvin could hardly have believed in free will, since he taught that our eternal fate is predestined. And Martin Luther quite adamantly opposed the idea in a tract called On the Bondage of the Will.[7] He argues that free will is contrary to what the Bible teaches, and he says that if man had free will, it could only mean the will to do evil:

In Romans 1:18, Paul teaches that all men without exception deserve to be punished by God: “The wrath of God is revealed from heaven against all ungodliness and unrighteousness of men, who hold the truth in unrighteousness.” If all men have “free will” and yet all without exception are under God’s wrath, then it follows that “free will” leads them in only one direction—“ungodliness and unrighteousness” (i.e., wickedness). So where is the power of “free will” helping them to do good? If “free will” exists, it does not seem to be able to help men to salvation because it still leaves them under the wrath of God.

And:

This universal slavery to sin includes those who appear to be the best and most upright. No matter how much goodness men may naturally achieve, this is not the same thing as the knowledge of God. The most excellent thing about men is their reason and their will, but it has to be acknowledged that this noblest part is corrupt.

And:

Now, “free will” certainly has no heavenly origin. It is of the earth, and there is no other possibility. This can only mean, therefore, that “free will” has nothing to do with heavenly things. It can only be concerned with earthly things.

Well, since Objectivism rejects the supernatural, free will in Objectivism “can only be concerned with earthly things”. But that was an aside.

Martin Luther claimed that we cannot reach salvation by “doing good”, but only by faith. But can we even choose to believe in God and in Jesus having absolved us from our sins? Oh no: that, too, is determined by God:

Every time people are converted, it is because God has come to them and overcome their ignorance by showing the Gospel to them. Without this, they could never save themselves.

And:

Grace is freely given to the undeserving and unworthy, and is not gained by any of the efforts that even the best and most upright of men try to make.

Luther claims to have Scripture on his side: He notes that when people have done bad things, it is not because they have chosen to, but because God “has hardened their hearts”. So, if we do good, it is God who has made us do good, and if we do evil, it is God who has made us do evil.

Reason is the devil’s highest whore.[8]

$ $ $

Religion has been a dominating force in man’s life since time immemorial; and the Western world has undoubtedly been dominated by Christianity. So to say that Christianity is part and parcel of our Western civilization and heritage is just a platitude. But are the things I have listed above Western values? Are they even civilized?

Ayn Rand said that the saving grace of Christianity is that it preaches the sanctity of the individual soul:

There is a great, basic contradiction in the teachings of Jesus. Jesus was one of the first great teachers to proclaim the basic principle of individualism — the inviolate sanctity of man’s soul, and the salvation of one’s soul as one’s first concern and highest goal; this means — one’s ego and the integrity of one’s ego. But when it came to the next question, a code of ethics to observe for the salvation of one’s soul — (this means: what must one do in actual practice in order to save one’s soul?) — Jesus (or perhaps His interpreters) gave men a code of altruism, that is, a code which told them that in order to save one’s soul, one must love or help or live for others. This means, the subordination of one’s soul (or ego) to the wishes, desires or needs of others, which means the subordination of one’s soul to the souls of others.

This is a contradiction that cannot be resolved. This is why men have never succeeded in applying Christianity in practice, while they have preached it in theory for two thousand years. The reason of their failure was not men’s natural depravity or hypocrisy, which is the superficial (and vicious) explanation usually given. The reason is that a contradiction cannot be made to work. That is why the history of Christianity has been a continuous civil war — both literally (between sects and nations), and spiritually (within each man’s soul). (Letters of Ayn Rand, p. 287.)

You may object that Christianity has also accomplished some great things. A couple of great philosophers – philosophers acknowledged by Objectivists to be great – were Christians (Thomas Aquinas and, to some extent, John Locke).[9] And Isaac Newton, arguably the greatest scientist of all time, was a Christian.[10]

Speaking personally, I enjoy churches and cathedrals. And certainly some Christians have composed some great music. What famous classical composer has not composed a religious oratorio or a mass? And the greatest of them all, Johann Sebastian Bach, has been called “the fifth evangelist”.

But this does not really change my point. For example, are cathedrals humble? Or are they intended to make us feel humble, when we enter them? And where is the humility in Bach’s music?

It all boils down to this question: Is our Western civilization what it is because of Christianity or despite Christianity? If you answer “because of”, beware of the implications!

[1] For Scandinavian-speaking readers, this essay is also available in a Swedish translation.

[2] For a discussion of this, see Søren Kierkegaard’s Fear and Trembling (or Frygt og Bæven in the Danish original).

[3] She told this to a patient, and the patient answered: “Then I want him to stop”.

[4] There is a story about Frank O’Connor (Ayn Rand’s husband) that his parents sent him to a Catholic school; but when they tried to teach him that babies are born in sin, he left and went to a common, non-religious school instead.

[5] I exclude some composers, like Henryk Górecki, Arvo Pärt and all the minimalists. But you may listen to them, if you want to torture your eardrums.

[6] This idea was launched by Max Weber. But I guess you already knew that.

[7] De servo arbitrio in the original Latin. It was an answer to Erasmus of Rotterdam’s De libero arbitrio or On the Freedom of the Will. My quotes are from a section that has been published separately on the web.

[8] “Vernunft ist des Teufels höchste Hure” in German. I call this rejection of reason “the fallacy of the stolen faculty”. Everyone has to use his reason even to put a simple sentence together, and Martin Luther did much more: apart from all the tracts he wrote, he translated the whole Bible into German and is credited with being the creator of modern German. How could he do this without “whoring with the devil”?

[9] Bad philosophers, according to Objectivism, include St. Augustine, Descartes and Immanuel Kant. Augustine and Kant certainly championed (if that is the right word) original sin and man’s innate depravity.

[10] He was an anti-Trinitarian, i.e. he opposed the doctrine of the Trinity. He is also reported to have said that space and time are the thoughts of God.

25 September, 2016

The other day I found the following in a debate on Facebook:

Forming new concepts requires the active, volitional use of measurement-omission (remember that forming concepts is literally a volitional, not automatic process).

This was part of an answer to the following paragraph from David Kelley’s book The Contested Legacy of Ayn Rand: Truth and Toleration in Objectivism:

If someone claimed to have evidence against the law of non-contradiction, we could be sure in advance that the evidence is mistaken. If that law is not an absolute, then there is no such thing as evidence, truth, or facts. One cannot claim to know that a principle presupposed by any possible knowledge is false. Suppose, by contrast, that we found certain concepts to which the theory of measurement-omission seemed inapplicable. Here we could not take the same approach. Because the theory explains so much, we would not give it up lightly. We would first try to show that the evidence is mistaken. But we could not be certain of this in advance, as we were with the law of non-contradiction. As an inductive hypothesis about the functioning of a natural object—the human mind—the theory of measurement-omission is open to the possibility of revision in the same way that Newton’s theory of gravity was. And the same is true for the other principles of Objectivism. [My italics.]

And here is the full rejoinder:

I’d like to ask him how on earth would he “find” (pay attention to his wording, he doesn’t say “form”, he says “find”) any concept to which measurement-omission “doesn’t apply”.

Does he think that one learns about measurement-omission and goes about in life trying to “see how it fits” with already formed concepts? As if it was some hypothetical prediction that for confirmation requires us to go around and try to make it “fit in” with concepts out there in nature??

If that’s what he thinks, he’s utterly wrong. Forming new concepts requires the active, volitional use of measurement-omission (remember that forming concepts is literally a volitional, not automatic process). We might even say that it’s presupposed by all subsequent forming (not finding) of concepts, just as he says it’s not.

Now, let me see if I can make heads or tails of this controversy.

The picture I get is David Kelley – or anybody who has read Introduction to Objectivist Epistemology – facing a bunch of scattered concepts. He picks one of them up and says: “This concept must have been formed by measurement omission.” He does the same with a second, a third, a fourth and an nth concept, and says the same each time. But since every language contains literally millions of concepts (or words denoting concepts), it is hard to be sure that one will not encounter some concept formed by a method other than measurement omission.

The upshot of this is that David Kelley does not know how to form concepts, since he has never formed one himself. He merely investigates concepts formed by others. With regard to concept formation, he is an abject second-hander. Ayn Rand had to tell him how concepts are formed.

But aren’t we all in the same predicament as Kelley here? None of us knew about measurement omission until we read ITOE. (If you did know, raise your hand and go to the head of the class.)

Speaking for myself, I have not the slightest recollection of how I formed my first concepts as a young child. This may be because I, like David Kelley, am an abject second-hander with regard to concept formation, but somehow I doubt it. (Again, raise your hand if you aren’t, and go to the head of the class.) Nevertheless, I managed to become quite proficient in Swedish (and fairly proficient in English). I learned and came to use one concept after another without giving a single thought to the measurements I omitted; and I did it quite effortlessly.

Now recall the first quote I gave:

Forming new concepts requires the active, volitional use of measurement-omission (remember that forming concepts is literally a volitional, not automatic process). [Emphasis added.]

How on earth did I learn to speak and write, if I did not actively and volitionally omit measurements? Yet, this very text proves that I did learn to speak and write.

One striking feature of man’s language development is the immense speed with which a child learns his first language – and also how fast he moves from one level of abstraction to the next. Just one example:

Very young children do not use pronouns like “I” – they refer to themselves by their given name. But this is a very short transitional stage. And if you study children, you can certainly find more examples of this. (For example, using Ayn Rand’s own example, how long does it take for a child to move from the first level concepts “table”, “chair”, “bed”, etcetera, to the second level concept “furniture”?)

Learning a second language later in life (or a third or an umpty-first) takes more of a conscious, volitional effort. It takes more time. Some people do it with greater ease than others, but no one does it as easily as they learn their first language. Again, taking myself as an example, I took English for eight years in school; but those eight years did not make me master the language. If I master it now, it is because I have read many books in English, I have lived among English speaking people, I have written quite a lot in English, and I have made translations from English into Swedish. Now I know English well enough to see the shades of difference between English and Swedish.[1]

(I also took German, French, Latin and ancient Greek in school, and later I learned a smattering of Spanish. But I certainly do not master those languages. It is a matter of actually using the languages.)[2]

But back to measurement omission.

That concepts are formed by some characteristics being retained and others omitted is not new with Ayn Rand – what is new is that it is specifically measurements that are omitted. The “pre-Randian” idea is that the essential characteristics are retained and the non-essential or accidental ones are omitted. “Essential” here means those characteristics that make a thing what it is and separate it from all other things.

Take, for example, the concept “coffee”[3]. What are the essential characteristics of coffee? Well, its color (black or dark brown), its taste (which distinguishes it from tea, milk, sugar, etcetera), and the fact that you have to make it by pouring water, preferably boiling water, over ground coffee beans.[4] What is omitted are such things as whether the beans were grown in Brazil or some other country (on the principle that they have to be grown somewhere but may be grown anywhere, within certain climatological limits). We also omit that some people take it straight, while others add sugar, milk or cream: it is still coffee, although the color may change. But the only measurement omitted is whether it is strong, weak, or something in between.

But on Ayn Rand’s theory, only the strength or weakness of the coffee would be significant. Or would it?

Let us see how Ayn Rand derives her theory:

Let us now examine the process of forming the simplest concept, the concept of a single attribute (chronologically, this is not the first concept that a child would grasp, but it is the simplest one epistemologically) – for instance, the concept “length”. If a child considers a match, a pencil and a stick, he observes that length is the attribute they have in common, but their specific lengths differ. The difference is one of measurement. In order to form the concept “length”, the child’s mind retains the attribute and omits its particular measurements. Or, more precisely, if the process were identified in words, it would consist of the following: “Length must exist in some quantity, but may exist in any quantity. I shall identify as ‘length’ that attribute of any existent possessing it which can be quantitatively related to a unit of length, without specifying the quantity.”

But no child goes through this rigmarole – certainly not with every new concept it forms or encounters. Ayn Rand, of course, is aware of this, so she continues:

The child does not think in such words (he has, as yet, no knowledge of words), but that is the nature of the process which his mind performs wordlessly. And that is the principle which his mind follows, when, having grasped the concept “length” by observing the three objects, he uses it to identify the attribute of length in a piece of string, a ribbon, a belt, a corridor or a street. (ITOE, p. 11 in the expanded second edition.)

Fair enough. But how could this wordless process (which I think would take place in a split second[5]) be an active, volitional process, requiring some conscious effort – as my first quote suggests?

Chronologically, this is not the first concept a child learns (or forms, or grasps). Children learn the names (or form or grasp the concepts) of entities first. And I think a child would learn (form, grasp) the concepts “long” and “short” before the slightly more abstract “length”.[6]

But one thing should be noted: “length” is itself a measurement concept. So of course measurements are omitted when it is formed. What else is there to omit?

But Ayn Rand’s theory is that this applies to all concepts. Her next example, with which you are certainly familiar, is the concept “table”. This is formed by noticing its shape: “a flat, level surface and support(s)”. But is “shape” a measurement? Well, one could say that a common rectangular table has four sides and four corners, a triangular table has three sides and three corners, and a circular or oval table has only one side and no corners at all. And most tables have four legs or supports, but they may actually have any number of legs or supports without ceasing to be tables. Tables are also distinguished from other objects by their function: “to support other, smaller objects”, but it does not matter how many other objects.

There are countless concepts to which measurement omission certainly applies. Take emotions: the concept “anger” covers everything from mild irritation to complete rage; the concept “fear” everything from mild nervousness to dreadful anxiety, etcetera. Or take thought processes: one may think hard about a subject or barely give it a thought. Love and hatred may be more or less intense; friendships more or less close; and you may think of more examples (many, or just a few).

Or take social (or political) systems: capitalism is characterized by private property, socialism by public property. But since, in today’s world, we have neither, but only mixed economies in various proportions, there is a graduated scale from “pure capitalism” to “pure socialism”, and we speak of more or less capitalism, more or less socialism.

Now some cases that at first glance appear to be hard:

“Here” and “now”, “there” and “then” are concepts that nobody has the slightest difficulty understanding.[7] But those are either–or concepts: an event happens here and now, or it happens there and then; there is no third possibility. So unless you count “one” and “zero” (or “yes” and “no”) as a measurement, there seem to be no measurements omitted or retained.

Concepts are often compared to file folders. Ayn Rand herself writes:

Concepts represent a system of mental filing and cross-filing, so complex that the largest electronic computer is a child’s toy by comparison. (ITOE, p. 69.)

The idea is that once you encounter (for example) horses, you make a file folder marked “horse” (or “häst”, “Pferd”, “cheval”, etc., depending on your native language). All the information you will ever acquire about horses then gets stuffed into this folder. If you are a hippologist, or work professionally with horses, the folder will be quite voluminous; but – since the folder is mental – there are no physical limitations to be considered. Everything that has ever been known, or will ever be known, about horses will fit into the folder. And the folder, or concept, itself will remain the same.

Now you encounter mules, so a new folder will be created. But, since quite a lot of what we know about horses and donkeys will also apply to mules, information will be copied from their folders and stuffed into the “mule” folder. And now you encounter centaurs (highly unlikely in real life, but they exist in mythology): you will copy information from the “horse” folder and the “man” folder and stuff it into this new folder.

Nor, since the folders are mental, is there any problem in stuffing them into larger folders, such as “mammal” or “animal” or “organism” or “entity”.

And an orderly filing system means an orderly mind; a filing system in disarray means a mind in disarray.

But what about the folders marked “here” and “now”? Everything that happens at some point happens here and now, so those folders would literally contain everything. Or else, those folders would be immediately emptied and all their content moved over to the opposite folders, those marked “there” and “then” – and then, those folders would literally contain everything.

But having given it some further thought (and after a good night’s sleep), I came up with the following:

When I say “here”, I can mean: here, in front of my computer (as opposed to the bedroom, the living room, the kitchen or the bathroom); or here, in my apartment (as opposed to the street outside), or here in town (as opposed to out of town), or here in Sweden (as opposed to all other countries) or here on earth. Or even here in the Solar System, here in the Milky Way, here in the universe. (Only in this last case, there is no “there” to oppose it, since there is nothing outside the universe.)

Similarly with “now”. I could mean now, this moment, or now, today, this week, this year, this century.[8]

Another hard case I thought about is prepositions. Expressions like “the cup is on the table” or “I am sitting in the room” appear to be either–or propositions: either the cup is on the table, or it is not. But what is omitted here is where on the table the cup is situated, and where in the room I am sitting. It has to be somewhere, but it may be anywhere. “To” and “from” have to be to or from somewhere, but may be to or from anywhere. “Above” and “below” do not specify the distance, but it has to be some distance. (And you can go through the rest of the prepositions yourself.)

Another hard case is interjections. What measurements do we omit when we say “ouch!” or “hooray!”, or greet someone with a “hello”? I really don’t know. But Ayn Rand states:

Every word we use (with the exception of proper names) is a symbol that denotes a concept, i.e. that stands for an unlimited number of concretes of a certain kind. (ITOE, p. 10.)

Every word, mind you. And interjections are not proper names!

Concepts perform the function of condensing information. So it may be said that “ouch!” condenses the information “it hurts”, “hooray!” condenses “I have achieved a value and feel happy about it”, and that “hello” condenses “I have recognized you and want to communicate this fact to you”. But where are the measurements omitted? Or are we to call it a measurement omitted that we have to say “hello” to some person, but may say it to any person?

And what about conjunctions – words that join clauses together in a sentence? I see no measurement in the word (or concept) “that”; all the measurements are in the clauses joined together. And what about the infinitive mark – “to” in English? It merely serves to indicate that the verb that follows is in the infinitive form. There is no “more or less” involved here. And what about the definite and indefinite articles?[9]

Verbs (which denote concepts of actions/motions or states) do involve measurements omitted – for example, “walk”, “run”, “swim”, “fly”, which do not specify the speed; or “sit”, “stand”, “lie”, which do not specify the length of time. But what about auxiliary verbs – such as “do” in this very paragraph[10], or “have” in “I have said it before”, or “is” in “he is running” – which perform only a grammatical function?

And do those words – that have a merely grammatical function and have no meaning outside their grammatical context – stand for concepts? Well, Ayn Rand said that every word (except proper names) stands for a concept. But – as Craig Biddle has pointed out – “Ayn Rand said” is not an argument.

The upshot of all this is that “measurement omission” is virtually self-evident with a concept like “length” (or “width” or “weight”), which is already in itself a measurement concept. But it becomes harder and harder with other concepts, and with some concepts it is virtually impossible.

And finally: If measurement omission is “active and volitional”, then what about all those millennia that have passed from pre-historic times, when the first man formed the first concept, up to 1966–1967, when ITOE was first published? Everybody who has formed (or grasped or learned) a concept would simply know what had been going on – so why did Ayn Rand have to write a book about it? It would be like writing a treatise on how children learn to walk – interesting, but it would add very little to our knowledge.

Ayn Rand was not the first one to write about concepts, but she was the first one to give serious attention to the formation of concepts. At least, to my knowledge.[11]

$ $ $

More on concept formation in What comes First, the Concept or the Word? Or search the tag concept formation. Scandinavian speaking readers may also read Vad ska vi med begrepp till? (i.e. What are Concepts For?).

[1] One such difference is that we do not use the expression “make heads or tails of”; we use expressions such as “make some sense of”. For other examples, see my blog post on the subject.

[2] There are some people – comparatively very few – who speak around thirty or more languages fluently. One of them was HS Nyberg, who was a professor of Semitic languages at the University of Uppsala. He reportedly spoke 28 different languages – until somebody reported that he also spoke Yiddish to his barber. Another one was Ferdinand de Saussure, the famous linguist. A third one was another Swedish linguist, Björn Collinder, who was a professor of Finno-Ugric languages. And I once met a person, who is not famous and whose name I have forgotten, who told me that if he spent two weeks in a foreign country, he managed to learn the language. To me, who have mastered only two languages, this sounds like magic. But there has to be an explanation of the phenomenon, although I don’t know it.

[3] I thought of this when I poured my first mug of coffee this morning (or early afternoon, rather). If you drink tea, it would not change much.

[4] I have never tried making coffee by pouring cold or lukewarm water; but something tells me it is not advisable.

[5] I assume it is instantaneous or almost instantaneous, because if a child went through this procedure with every new concept he encountered, he would not have the time to learn very many concepts, and language development would be very slow, which it certainly is not.

[6] I think this can be verified by closely studying the language development of children.

[7] An exception is St. Augustine, who famously claimed that as long as he does not think about time, he understands it, but as soon as he starts thinking about it, or explaining it, he has no clue. (Book 11 in Confessions.)

[8] St. Augustine, by the way, got into his trouble with time by only considering the fleeting moment as “now” – a “now” that immediately passes into the past.

[9] Some languages, like Latin, do not even have those parts of speech. “To be” in Latin is just “esse”, and Latin makes no distinction between “a house” and “the house”. Ancient Greek at least has a definite article. But the modern languages with which I am familiar do have them.

[10] The “do-construction”, by the way, does not exist in languages other than English. The “have-construction”, on the other hand, is common to many languages. Latin and ancient Greek don’t have it, but use inflections instead.

[11] Plato had the idea that our concepts are recollections of a former existence in the “world of forms”. Aristotle, I believe, was the father of the distinction between “essential” and “accidental” characteristics. The medieval scholastics did write about concepts, and so did John Locke. Immanuel Kant merely pressed all concepts into his scheme of twelve categories. But no philosopher before Ayn Rand, as far as I know, addressed the issue of how concepts are actually formed.

7 September, 2016

Facebook note from September 2010.

What is the central concept of ethics? There are two answers to this question: Immanuel Kant makes “duty” the central concept; Ayn Rand makes “value” the central concept. This, in a nutshell, explains why Objectivists cannot stand Kant; it also explains why so many people cannot stand Ayn Rand; they are simply too steeped in a deontological view of ethics (and Kant was not the only deontologist in the history of philosophy).

How do Rand and Kant arrive at those widely diverging fundamental concepts? I do not know about Kant – he seems to have simply taken it for granted – but Ayn Rand tells us. I won’t repeat her derivation of “value” from “life”, because you are already familiar with it. But she also has something to say about the formation of the concept “value” in a child:

Now, in what manner does a human being discover the concept of “value”? By what means does he first become aware of the issue of “good or evil” in its simplest form? By means of the physical sensations of pleasure or pain. Just as sensations are the first step of the development of a human consciousness in the realm of cognition, so they are the first step in the realm of evaluation. (From “The Objectivist Ethics” in The Virtue of Selfishness.)

Does this sound like hedonism? Well, we know Ayn Rand was “profoundly opposed to the philosophy of hedonism”. And we know why: pleasure could not possibly be a standard of value. Neither could happiness: one’s own happiness is the proper goal of morality, but it is not the standard.

But “life as the standard” is too abstract for a small child to grasp. For one thing, the child does not yet know about death, and so cannot grasp the fundamental alternative of “life or death”. It only knows “pleasure or pain” and can then proceed to the slightly more abstract “happiness or suffering”.

An implication of this is that a child starts out as a hedonist: “pleasure” is the implicit standard. As his knowledge grows, he becomes a eudaemonist: “happiness” becomes the implicit standard. And finally, when he grasps that it all has its roots in the alternative of “life or death”, he becomes an Objectivist. (But he probably would have to read Ayn Rand to arrive at this stage.) And from this it would also seem that hedonism is closer to the truth than a deontological ethics.

How would a child form a deontological or “duty-centered” ethics?

What is “duty”? Essentially it is obedience to some authority. For a small child, the authority would be his parents, so it is his duty to obey them. Later comes the duty to one’s country, or to God, or whatever. (Immanuel Kant would object to this and say it is a matter of obeying one’s own conscience – but to untangle this, I would have to write an essay on Kant’s distinction between the “noumenal” and the “phenomenal” self.)

Now, children do obey their parents (and later their school teachers) to a large extent. And so long as parents and teachers are rational, I see no harm in this. To use a phrase from Cesar Millan (“the dog whisperer”), children, as well as dogs, need “rules, boundaries and limitations”. The child will discover on his own the reasons for those rules, boundaries and limitations; and he will object to them only if and when he finds something wrong with them. (Of course, this last point is not applicable to dogs.) (And it goes without saying that the matter is very different, if or when parents and teachers are irrational.)

The reason I started thinking about this is that somebody recommended that I read Jean Piaget. I have read one of his essays, though unfortunately in a Swedish translation, so I cannot give any quotes. But the point is that Piaget writes that “pleasures” and “duties” sometimes conflict; and if I understand him correctly, he thinks that “duty” takes precedence over “pleasure”; it is a sign of maturity in a child when he subordinates a temporary pleasure to some duty. This is hardly the Objectivist view…

Piaget spent most of his life studying the cognitive development of children and adolescents and developed an extensive and rather complex theory about it. It was based, as all good theories should be, on observation. This “pleasure/duty” clash is one such observation. It is hard to reconcile with Ayn Rand’s view, but she might answer that it is actually a clash between “short-term pleasure” and “long-term happiness”. An example of this is that it may be painful to go to the dentist; but we do it anyway, since not doing it will impede our future happiness.

(Added 2016.)

In the period preceding Kant, it was customary among rights philosophers to distinguish between three kinds of duties: duties to God, to society (or one’s fellow human beings) and to oneself.[1] Immanuel Kant, to the best of my knowledge, makes no such distinction.

Kant famously argued that man has a duty to preserve his life, even (and especially) when life has become so painful as to be unbearable. Is this a duty to God? Why should God even care, unless he were a sadist? Is it a duty to one’s fellow men? But why would they want you to suffer? Is it a duty to oneself? Hardly. No, it is just duty for the sake of duty, with no visible beneficiary.

$ $ $

A famous Objectivist once said in a courtroom speech:

Man’s first duty is to himself.

He obviously had not read Ayn Rand’s essay “Causality versus Duty”, where she dismisses the very concept of duty.[2]

$ $ $

Aristotle’s ethics is of course value-centered, in that it has a specific aim: the achievement of ευδαιμονια, i.e. happiness or flourishing. There is no talk in Aristotle about obedience to some authority, whether outer or inner. The same, of course, is true of any form of eudaemonism or hedonism.

$ $ $

Once a long time ago I saw the objection to Objectivism, from an academic philosopher, that there is no duty to act egoistically, just as there is no duty to act altruistically. Abysmal ignorance about Ayn Rand’s philosophy.

Or are we to assume that there is a duty to pursue values? That we should pursue them because some outer or inner authority has commanded us to pursue them?

[1] See for example Samuel Pufendorf, De officio hominis et civis juxta legem naturalem (or On the Duty of Man and Citizen According to Natural Law in English), published in 1673.

[2] I am referring of course to Howard Roark. Don’t take the words “famous Objectivist” too seriously!

23 August, 2016

Well, Ludwig von Mises thought so. I quote from Human Action:

Human action is necessarily always rational. The term “rational action” is pleonastic and must be rejected as such. (P. 21.)

In view of all the irrationality we observe around us, this statement sounds … well, not exactly rational. “Weird” or even “insane” would be good descriptive terms.

So what is so immensely rational about all human action that all human action is to be labeled rational? Well, all human action is about relating means to ends. Mises has some examples:

The very existence of ascetics and of men who renounce material gains for the sake of clinging to their convictions and of preserving their dignity and self-respect is evidence that the striving after more tangible amenities is not inevitable but rather the result of a choice. Of course, the immense majority prefer life to death and wealth to poverty. (P. 20.)

But nothing could be said against those who make the opposite choice and prefer death to life and poverty to wealth. They have a different end from the immense majority and choose means accordingly.

The doctors who a hundred years ago employed certain methods for the treatment of cancer which our contemporary doctors reject were – from the point of view of present-day pathology – badly instructed and therefore inefficient. But they did not act irrationally; they did their best. (P. 20.)

In other words: people may be wrong in their choice of means; but being wrong is not the same as being irrational.

The opposite of action is not irrational behavior, but a reactive response to stimuli on the part of the bodily organs and instincts which cannot be controlled by the volition of the person concerned. (P. 20.)

So when a man acts “irrationally”, he actually does not act at all; he merely reacts, just the way animals do.

Conspicuously absent here is any attempt to analyze criminal behavior. But a criminal also relates means to ends. A bank robber has to use reason to plan and carry out his robbery – it is certainly not just a bodily reaction. Someone who wants to get rid of his rich grand-uncle in order to inherit his money has to carefully plan and perform the murder, and in a way that minimizes the risk of discovery. (He should, for example, abstain from the attempt if there is a Hercule Poirot or a Jane Marple in the vicinity.) According to Mises, he is as rational as anyone else. Only murders committed on the spur of the moment in a drunken brawl would classify as irrational, since the murderer then does not have time to consider his means or his ends.

So what leads Mises to make such a statement and seriously mean it? It should come as no surprise that it is because of his idea that ultimate ends fall outside the realm of reason. Continuing the first quote above:

When applied to the ultimate ends of action, the terms rational and irrational are inappropriate and meaningless. The ultimate end of action is always the satisfaction of some desires of the acting man. Since nobody is in a position to substitute his own value judgments for those of the acting individual, it is vain to pass judgment on other people’s aims and volitions. No man is qualified to declare what would make another man happier or less discontented. (P. 20.)

So we should not pass judgment on the bank robber or grand-uncle murderer mentioned above. Who are we to substitute our own value judgments for theirs? But it is even worse:

The critic either tells us what he would aim at if he were in the place of his fellow; or, in dictatorial arrogance blithely disposing of his fellow’s will and aspirations, declares what condition of this other man would better suit himself, the critic. (P. 20; italics mine.)

Passing judgment on the bank robber or the murderer would be dictatorial arrogance!

Of course, Mises did not mean this – he forgot to think about criminal action – but this is still what he says!

What, then, is the ultimate end of the bank robber/murderer? Is it – as for any honest worker or millionaire – to make money or to earn his living? Is it just the means that are somehow inappropriate? But the bank robber did not make the money – it was made by the persons who had deposited their money in the bank. The murdered grand-uncle, not his dishonest heir, made his money (provided he earned it honestly).[1] And, as for “earning a living”, this shows some confusion about the meaning of “earn”.

Or is it, more broadly, the pursuit of happiness? Well, most bank robbers (and murderers) get caught, and those who don’t have to live in constant fear of getting caught. It could only be called “pursuit of happiness” if someone preferred living in jail to living outside – or living in fear rather than in safety.

And what about suicide bombers? Those, too, are conspicuously absent in Mises’ reasoning – probably because he had no experience of them and could not even imagine this kind of evil. Otherwise, the existence of suicide bombers is as much proof as the existence of ascetics that some people do not prefer life to death. And the suicide bomber can hardly be said to pursue happiness, at least not here, on earth. He would have to take the promise of paradise in the hereafter quite seriously. – But given this end – life and well-being when you are already dead – they, too, relate means to ends. But they are badly mistaken, both about the end and the means![2]

Ayn Rand made some harsh remarks in the margin of Human Action, of which I will quote just one:

Nobody can get anywhere with such a terminology! (Ayn Rand’s Marginalia, ed. by Robert Mayhew, p. 110.)

Objectivism, as you all know, holds the preservation and enhancement of life as the ultimate end and claims that this can be objectively proven. (I will not attempt to present the proof, since both “Galt’s speech” and “The Objectivist Ethics” are available for anyone to read.) This does not mean that everyone automatically agrees about this end, merely that everyone should agree. Mises claims that the majority does agree, but that is not the same thing – it leaves the possibility open that the majority is wrong.

Saying that the ultimate end is “beyond reason” and can neither be proved nor disproved makes it impossible to go anywhere!

Much as I admire Ludwig von Mises, on this issue he was dead wrong.

[1] Off topic, but worth mentioning: If you want to equivocate, you might claim that a counterfeiter “makes money”, but that money is just that: counterfeit. The same goes for the inflationary money that governments and central banks pour on us, which only makes us poorer. The only ones who could be said to “make money” in this sense are those who mine and mint the precious metals.

[2] I refer you to this article in The Onion.

29 June, 2016

In my essay Objectivism versus “Austrian” Economics on Value (in the second preamble) I made the point that there are non-economic values as well as economic ones. For example, family and friends are values, but no one would dream of putting them up for sale in the market. A pet is a value, but it has market value only when you first buy it; if for some reason – illness or whatever – you can no longer take care of your pet and you do not want to put it down, you have no choice but to give it away for free. I also made a comparison between Human Action and Das Kapital, which may cost approximately the same in the bookstore but are of totally different value – the first one you buy to learn something about economics, the second one just to study the enemy. But I wrote nothing about esthetic values, so let me elaborate a little on them.

Esthetic products do have a market value, since people are willing to spend money on them: they buy novels or poetry collections, paintings or reproductions, gramophone records or CDs, theater or concert tickets, etc. But is the value of a novel, a painting or a musical composition really equivalent to its market value or price?

Well, take an obvious example: The value to me of Ayn Rand’s novels has nothing whatsoever to do with how many copies of them have been sold or at what price. I would not think less of them if there were only one copy in existence and I were the only one to have read it. (This is of course an impossible scenario, but I think you get my point.) And for those of you who do not care for Ayn Rand’s novels but prefer Ulysses and Finnegans Wake (or whatever), the point would be the same.

The same holds true for music. To me, the music of Johann Sebastian Bach (or any of the great classical composers) is far more valuable than, say, modern rap or hip-hop. Yet the price of the records is approximately the same, and in number of records sold, the moderns might outsell the classics.

Sometimes the discrepancy is dramatic. I do not know whether Vincent van Gogh sold any of his paintings while alive, but if he did, it must have been for pocket money. Today, one will have to pay millions to acquire a van Gogh. (Fortunately, there are good reproductions.)

And this is just a particularly dramatic example. It is also true of old masters whose greatness was recognized in their own lifetimes. For example, I am sure that Leonardo da Vinci was paid for painting Mona Lisa; but how much do you think art collectors would pay for it today, if it were ever put up for sale at an auction?

I cannot claim originality for those observations. In her essay “What is Capitalism?” in Capitalism: The Unknown Ideal, Ayn Rand makes the distinction between “philosophically objective value” – i.e. “value estimated from the standpoint of the best possible to man” – and “socially objective value” – where the last stands for market value. She was too modest to use her own novels as examples, so she wrote:

For instance, it can be rationally proved […] that the works of Victor Hugo are objectively of immeasurably greater value than true-confession magazines.[1] But if a given man’s intellectual potential can barely manage to enjoy true confessions, there is no reason why his meager earnings, the product of his effort, should be spent on books he cannot read […] (P. 24 in the paperback edition.)

Neither is there any reason why a person who can only enjoy rap and hip-hop – or the Eurovision Song Contest – should pay for my enjoyment of the Brandenburg Concertos or Schubert’s Die schöne Müllerin.

And in fact it is a good and valuable thing that this “discrepancy” exists. If Atlas Shrugged were priced according to its “philosophically objective value”[2], who could afford to buy it? And if only millionaires could afford to buy it, how could the man in the street discover its actual, non-commercial, value?

[1] I don’t dispute this; but I would like to see the rational proof.

[2] Assuming this could be estimated in monetary terms.

27 March, 2016

Recently, Anoop Verma wrote a blog post, Ayn Rand’s Copernican Revolution in Philosophy, and then privately asked for my feedback. I have very little to criticize in his post; but it gives me an opportunity to present my own view.

In my view, the most fundamental thing about Ayn Rand’s philosophy is the insight that all knowledge is the result of the interaction between existence and consciousness, between the external world and our minds.

Sensation and perception are the result of the interaction between the external world and our senses. Concept formation is the result of our identification of the facts of reality. And values are a matter of relating what we value (or disvalue) to our life and well-being. (Politics is about applying ethics to our social life.)

This is even true about esthetics; her definition of art is

a selective re-creation of reality according to an artist’s metaphysical value-judgements. (“Art and Cognition” in The Romantic Manifesto.)

A re-creation of reality, not of something outside of reality.

Obviously, this is not an exhaustive presentation of her philosophy, but it is something that has always struck me. With the partial exception of Aristotle (and his followers), I don’t think other philosophers have even come close to this insight. Platonism, for example, is clearly about our “interaction” with a mystical realm; and Kant’s philosophy is a variation on this theme.

In ethics, there are basically two views:

That they are a matter of what we feel is right or wrong, and that those feelings are not connected to reality – that there is an unbridgeable gulf between “is” and “ought” and all that kind of jazz.[1]

And in politics the idea that we have to choose between tyranny (totalitarianism) and anarchy.

The philosophy of Ayn Rand is commonly contrasted with that of Immanuel Kant; Ayn Rand herself did so:

On every fundamental issue, Kant’s philosophy is the exact opposite of Objectivism. (“Brief Summary”, The Objectivist, September 1971.)

She also wrote:

[Kant’s] argument, in essence, ran as follows: […] man is blind, because he has eyes – deaf, because he has ears – deluded, because he has a mind – and the things he perceives do not exist, because he perceives them. (The title essay in For the New Intellectual.)

It is often forgotten (or so it seems to me) that those are not Immanuel Kant’s own words; they are Ayn Rand’s summary of his epistemology. Objectivists take this description at face value; they do not bother to read Kant and verify it.[2]

It is a common misunderstanding that Kant denied the evidence of the senses; in fact, he defended the senses, and he even gave proper arguments.[3]

Kant makes three points about the senses:

He does however say that what the senses provide us with is “appearances”, not true (“noumenal”) reality.

Also, Kant did believe in the existence of an external world, although he claimed that it is inaccessible to us. His argument ran as follows: We live in a world of appearances; but there cannot be appearances without things that appear; thus those things must exist somewhere and somehow; but we can never know where or how.

But Kant would not be Kant if he thought this was all there is to it. Although the senses give us valid information, he thought that space and time do not come to us through the senses; they are, as he called them, “forms of appearance” (“Anschauungsformen” in German), through which the sense data are “filtered”. Rather than being part of our experience of the world, space and time are supplied by our own mind and are necessary “a priori” conditions for having experience at all.

I regard this view as ridiculous. We form the concepts “space” and “time” by observing a variety of spatial and temporal relationships (“the book is on the table”, “it happened yesterday”, etc. etc.) “Space” and “time” refer to the sum of those relationships.[4]

This should be enough for now (an “ought” and a temporal specification).

$ $ $

(See also Rand Debating Kant and Evil Thoughts?. – Unfortunately (for most of you), almost everything I have written about Kant is in Swedish.)

Update March 29: I have now written a fairly extensive English blog post about Kant.

[1] Scandinavian speaking readers may read my recent blog posts about Axel Hägerström. Or Gastronomi och moral.

[2] There may be exceptions; if you are such an exception, I apologize.

[3] I found this out by perusing (I worked in a library of old books) Anthropologie in pragmatischer Hinsicht (Anthropology from a Pragmatic Point of View). Unfortunately, I cannot find an English translation of this passage in the book.

[4] Neither, by the way, did Kant ever say things like “it is true, because I feel it’s true” – quite the contrary! In Foundations of the Metaphysics of Morals, he goes to some length explaining that the categorical imperative is not a matter of emotion but of reasoning. And in Religion Within the Boundaries of Mere Reason, one will find – buried among all the talk of selfishness or self-love being the “radical evil” of human nature – the short and simple sentence: “Emotions are not knowledge”.

23 March, 2016

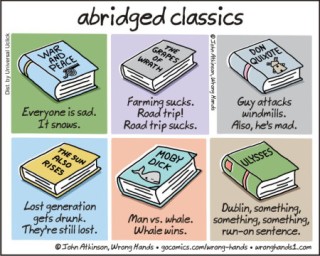

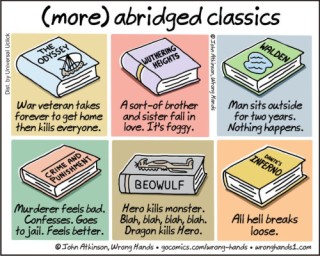

Inspired by Wrong Hands: Cartoons by John Atkinson.

“Who is John Galt?”

People start looking for John Galt.

Some people disappear.

John Galt appears; gives speech.

John Galt gets captured and tortured.

John Galt gets rescued.

John Galt and his rescuers take over the world.

And last, but not least:

People read the book, and lives (including mine) are changed.

Others hate it, and their lives remain unchanged.
